Hi!

>>> print(self.status)
3rd year PhD student at AMLab

>>> print(self.supervisors)
Max Welling, Jan-Willem van de Meent, and Alfons Hoekstra

>>> print(self.research_interests)
hierarchical models, sub-quadratic architectures, scalable geometric deep learning

latest posts

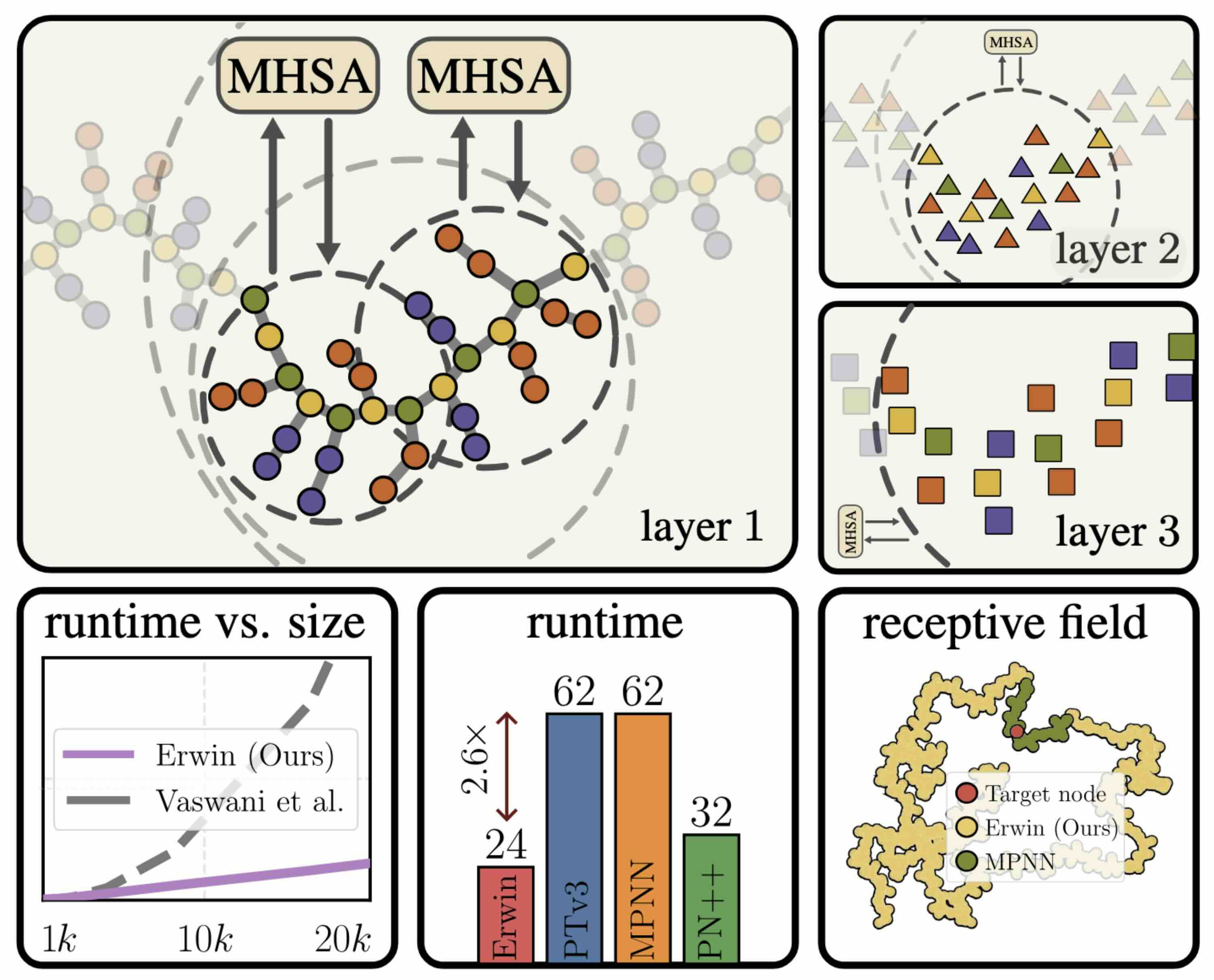

| Jun 2025 | Erwin Transformer |

|---|---|

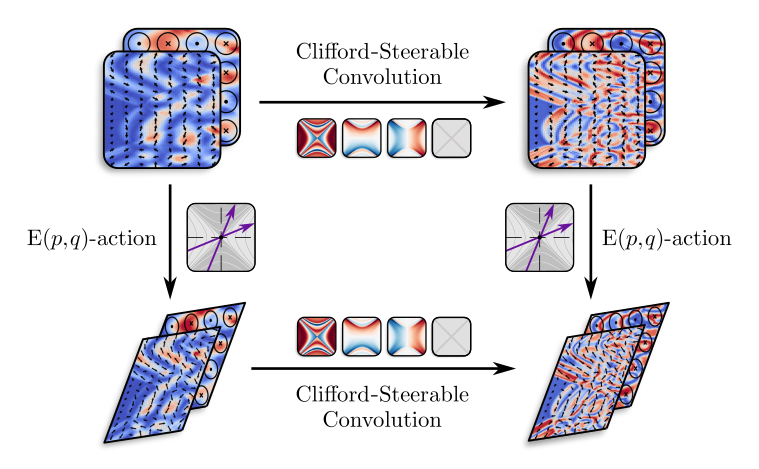

| Aug 2024 | Clifford-Steerable CNNs |

| Oct 2023 | Implicit Steerable Kernels |

selected publications

-

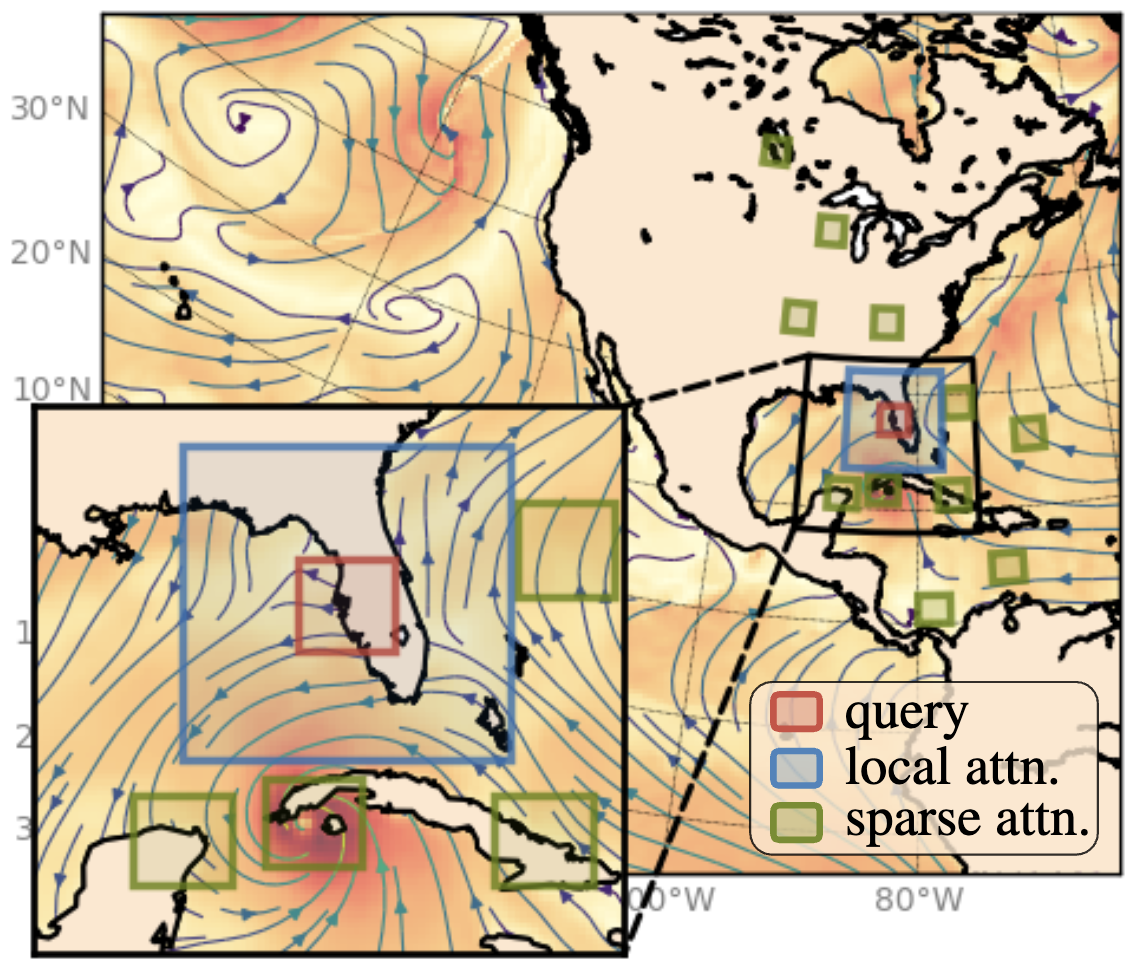

(Sparse) Attention to the Details: Preserving Spectral Fidelity in ML-based Weather Forecasting Modelspreprint

(Sparse) Attention to the Details: Preserving Spectral Fidelity in ML-based Weather Forecasting Modelspreprint

news

| Jan 2026 |

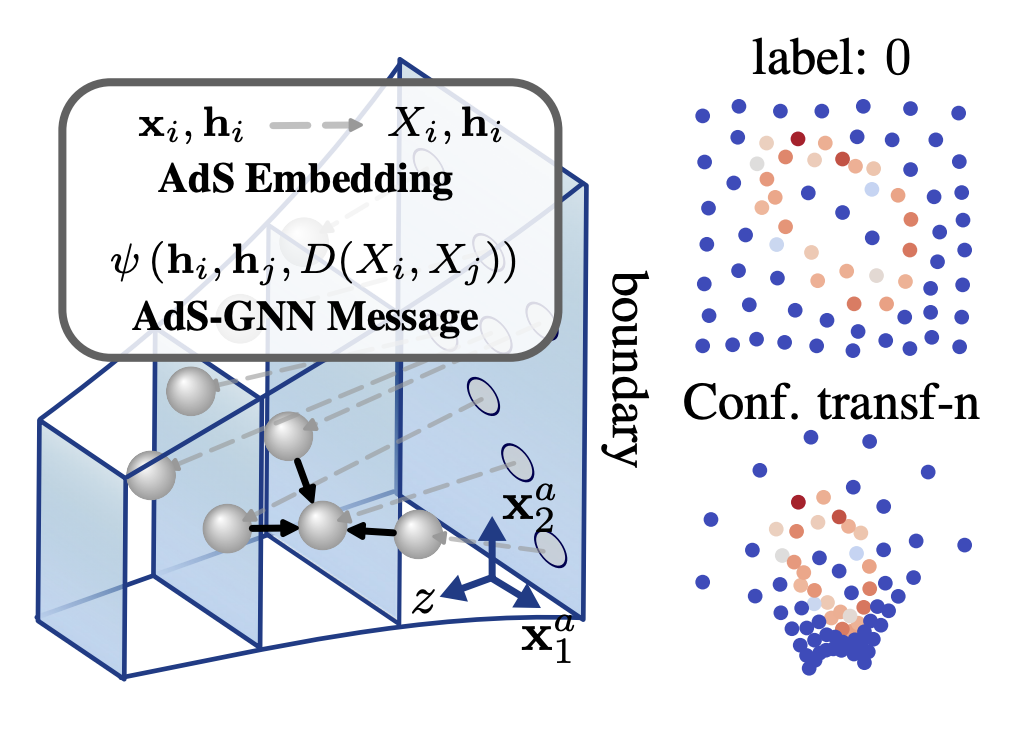

[🥳 Paper accepted] AdS-GNN was accepted to ICLR 2026! See you all in Rio 🇧🇷!

|

|---|---|

| Dec 2025 | [🚨 New paper] We depeloped MSPT - parallelized multi-scale attention method based on hierarchical partitioning of data. It is incredibly fast and achieves SOTA performance on multiple PDE tasks. |

| Dec 2025 | [🥳 Paper accepted] Our submission Adaptive Mesh Quantization for Neural PDE Solvers was accepted to TMLR! We suggest a data-driven way of quantizing message-passing neural networks inspired by speculative decoding. |