Equivariance and feature fields

From the atomic level to the vast expanse of the universe, symmetry and equivariance are consistently observed.

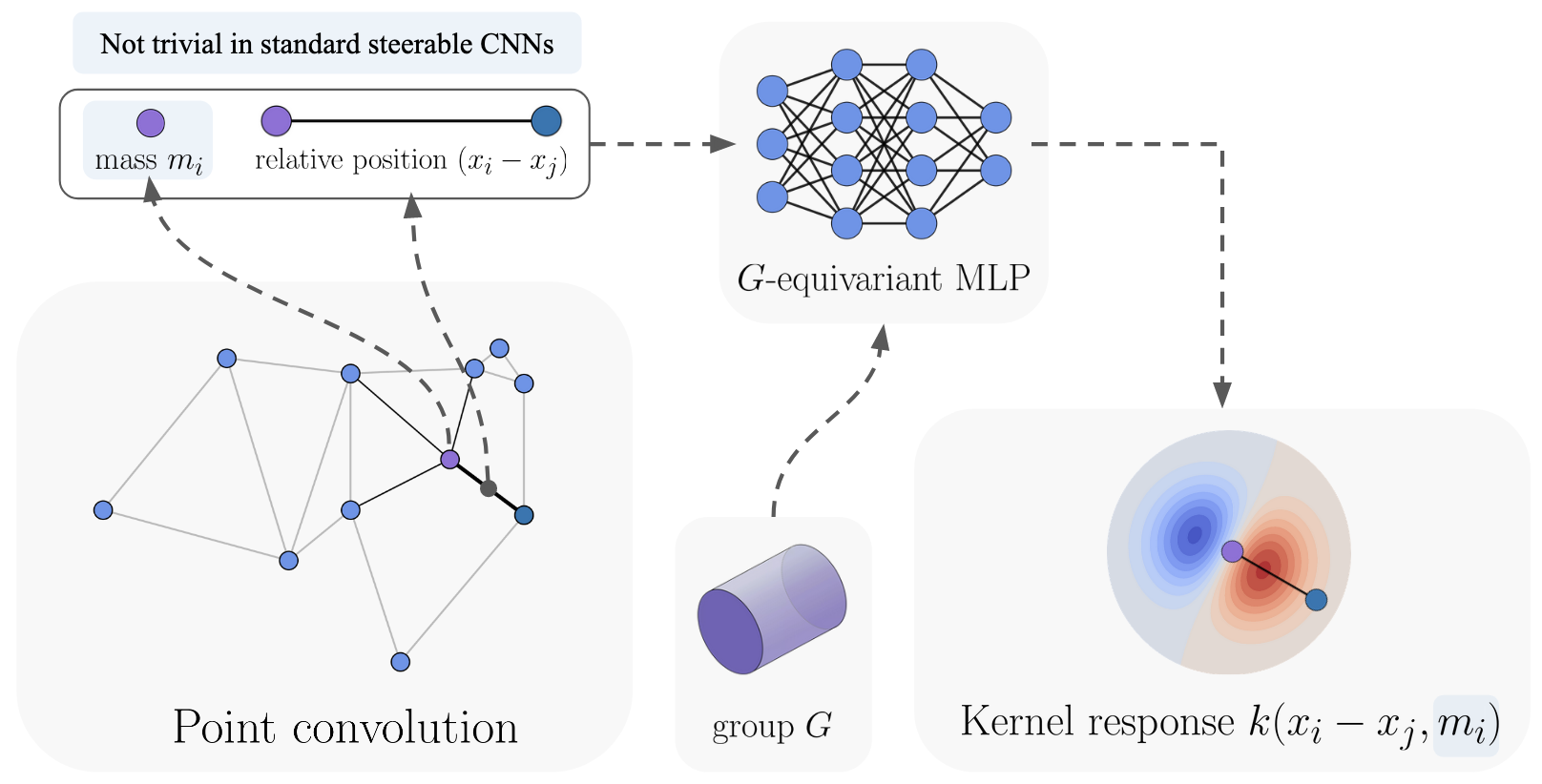

Whether it is the behaviour of molecules or patterns in point clouds, there are often properties of the system that are preserved under certain transformations.

Equivariant deep learning aims to encode these symmetries directly into the learning process, yielding more efficient and generalizable models.

Such models carry transformations of the input data through to the model's output in a predictable way: transforming the input changes the output by a corresponding, known transformation.

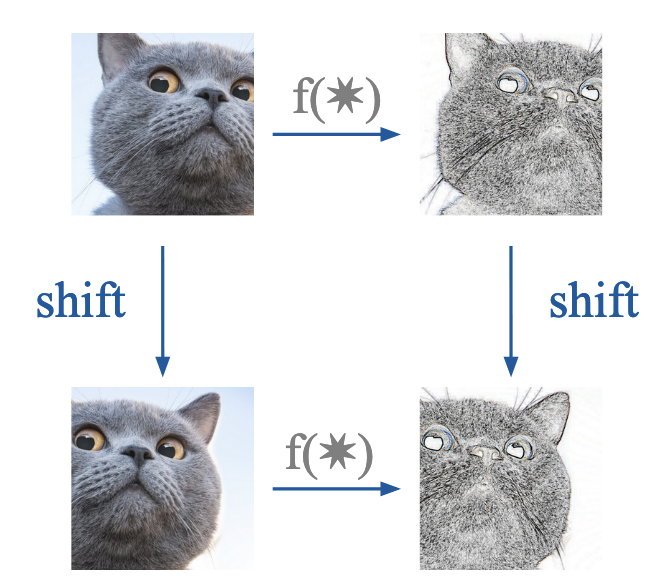

Convolutional Neural Networks (CNNs) serve as a classic example, being equivariant with respect to translations in the input space (try shifting the image before a convolutional layer, then shifting the output after it instead, and compare the two results).
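The shifting experiment can be sketched in a few lines of plain Python. This is a minimal illustration, not a real CNN layer: it uses a 1D signal instead of an image and assumes periodic (circular) boundaries so that the equality is exact. The helper names `circular_shift` and `circular_correlate` are made up for this example.

```python
def circular_shift(signal, shift):
    """Cyclically shift a 1D signal to the right by `shift` positions."""
    n = len(signal)
    return [signal[(i - shift) % n] for i in range(n)]


def circular_correlate(signal, kernel):
    """Cross-correlate a 1D signal with a kernel, wrapping around at the ends.

    Assumption for this sketch: periodic boundaries, stride 1.
    """
    n = len(signal)
    return [
        sum(kernel[k] * signal[(i + k) % n] for k in range(len(kernel)))
        for i in range(n)
    ]


signal = [0.0, 1.0, 3.0, 2.0, 0.0, 0.0, 5.0, 1.0]
kernel = [1.0, -2.0, 1.0]
shift = 3

# Path 1: shift the input, then apply the convolutional layer.
shift_then_conv = circular_correlate(circular_shift(signal, shift), kernel)

# Path 2: apply the convolutional layer, then shift its output.
conv_then_shift = circular_shift(circular_correlate(signal, kernel), shift)

# Translation equivariance means both paths give the same feature map.
print(shift_then_conv == conv_then_shift)
```

With zero padding or strides larger than one, as in many practical CNN layers, the two paths can differ near the borders or for shifts that are not multiples of the stride, so the equality holds only approximately there.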

However, to capture a broader range of symmetries found in complex systems, especially in physics and chemistry, group equivariant CNNs (G-CNNs) have been developed.

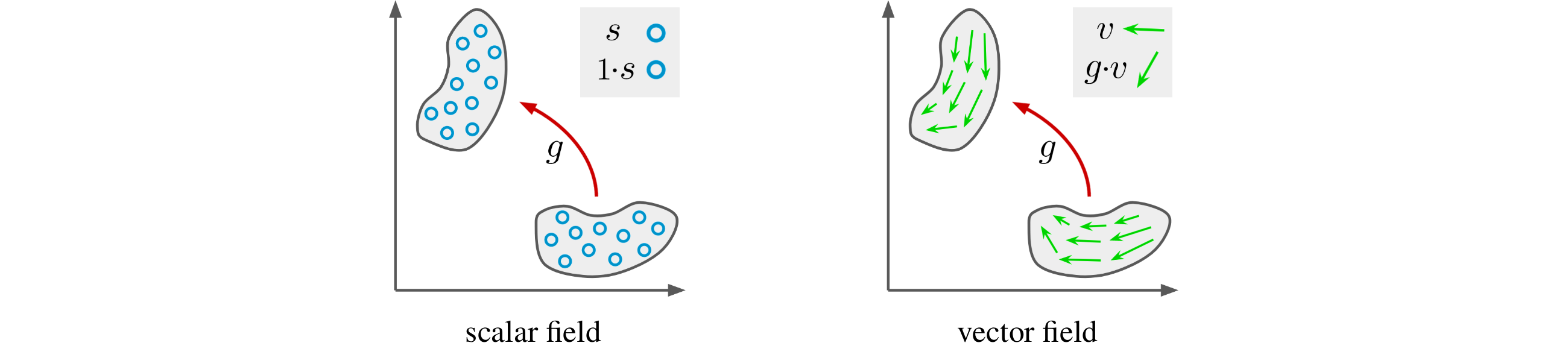

Depending on its type, a feature field has a specific transformation behaviour when acted on by a group element, e.g. a rotation.

This behaviour is described by a group representation, which is a mapping from group elements to linear operators on the field space.

For example, the values of a scalar field are unchanged by a rotation (only their positions move), so the linear operator is the identity, which corresponds to the trivial representation.
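To make the notion of a representation concrete, here is a small sketch for planar rotations, assuming the group element is given as an angle in radians. It contrasts the trivial representation (scalar features) with the standard 2x2 rotation matrix (vector features), and checks the defining property that composing two group elements corresponds to multiplying their operators. All function names (`trivial_rep`, `standard_rep`, `matmul`, `apply_operator`, `matrices_close`) are hypothetical helpers written for this example.

```python
import math


def trivial_rep(angle):
    """Trivial representation: every rotation maps to the 1x1 identity."""
    return [[1.0]]


def standard_rep(angle):
    """Standard representation of a planar rotation: a 2x2 rotation matrix."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s], [s, c]]


def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply_operator(matrix, feature):
    """Apply a linear operator to a feature vector."""
    return [sum(m * v for m, v in zip(row, feature)) for row in matrix]


def matrices_close(a, b, tol=1e-12):
    """Entry-wise comparison that tolerates floating-point rounding."""
    return all(
        math.isclose(x, y, abs_tol=tol)
        for row_a, row_b in zip(a, b)
        for x, y in zip(row_a, row_b)
    )


g, h = math.pi / 3, math.pi / 6

# A representation must respect composition: rho(g) rho(h) == rho(g composed with h).
for rep in (trivial_rep, standard_rep):
    assert matrices_close(matmul(rep(g), rep(h)), rep(g + h))

quarter_turn = math.pi / 2
scalar_feature = [2.5]        # e.g. a temperature value at one point
vector_feature = [1.0, 0.0]   # e.g. a velocity pointing along x

rotated_scalar = apply_operator(trivial_rep(quarter_turn), scalar_feature)
rotated_vector = apply_operator(standard_rep(quarter_turn), vector_feature)

print(rotated_scalar)  # the scalar value is unchanged
print(rotated_vector)  # the vector now points (up to rounding) along y
```

This sketch only covers how the feature value at a single point transforms. For a full field, the rotation also moves each value to a new position.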

Furthermore, we must require that the model respects the transformation laws of its input, intermediate and output feature fields, which is essentially the equivariance constraint covered next.